(I didn’t use ChatGPT for any part of writing this, and there’s no “ha ha actually I did” at the end)

This year, I quit my job after three years during which I neglected updating my resume or online profiles and didn’t do anything you could consider networking (in fairness, it’s been a weird three years). All the things you’re supposed to keep up on so you’re prepared, I didn’t do any of it.

And as a product person, I wanted to exercise these tools and so I tried to use them in every aspect of my job search. I subscribed, used ChatGPT 4 throughout, and here’s what happened:

ChatGPT was great for:

- Rewriting things, such as reducing a resume or a cover letter

- Interview prep

It was useful for:

- Comparing resumes to a job description and offering analysis

- Industry research and comparison work

I don’t know if it helped at:

- Keyword stuffing

- Success rates, generally

- Success in particular with AI screening tools

It was terrible, in some cases harmful, at:

- Anything where there’s latitude for confabulation — it really is like having an eager-to-please research assistant who has dosed something

- Writing from scratch

- Finding jobs and job resources

This job search ran from May until August of 2023, when I started at Sila.

An aside, on job hunting and the AI arms race

It is incredible how hostile this is on all sides. When I was hiring, the volume of resumes swamped us, and many of them were entirely irrelevant to the position, no matter how carefully crafted the job description was. I like to screen resumes myself, and that meant I spent a chunk of every day scanning a resume and immediately hitting the “reject” hotkey in Greenhouse.

In a world where everyone’s armed with tools that spam AI-generated resumes tailored to meet the job description, it’s going to be impossible to do. I might write a follow-up on where I see that going (let me know if there’s any interest in that).

From an applicant standpoint, it’s already a world where no response is the default, form responses months later are frequent, and it’s nigh-impossible to get someone to look at your resume. So there’s a huge incentive to arm up: if every company makes me complete an application process that takes a minimum of 15 minutes and then doesn’t reply, why not use tools to automate that and then apply to every job?

And a quick caution about relying on ChatGPT in two ways

ChatGPT is unreliable right now, in both the “is it up” sense and the “can you rely on results” sense. As I wrote this, I went back to copy examples from my ChatGPT history and it just would not load them. No error, nothing. This isn’t a surprise — during the months I used it, I’d frequently encounter outages, both large (like right now) and small, where it would error on a particular answer.

When it is working, the quality of that work can be all over the place. There are some questions I got excellent responses to at the time that, as I check my work now, just trigger a web search with a reworded query, follow a couple of links, and then summarize whatever SEO garbage it ingested.

While yes, this is all in its infancy and so forth, if you have to get something done by a deadline, don’t depend on ChatGPT to get you there.

Then in the “can you rely on it” sense: I’ll give examples as I go, but even using ChatGPT 4 throughout, I frequently encountered confabulation. I heard a description of these language models as being eager-to-please research assistants armed with Wikipedia and tripping on a modest dose of mushrooms, and that’s the best way to describe it.

Don’t copy paste anything from ChatGPT or any LLM without looking at it closely.

What ChatGPT was great for

Rewriting

I hadn’t done a deep resume scrub in years, so I needed to add my last three years and chop my already long and wordy resume down to something humans could read. (Here I’ll add: if you’re submitting to an Applicant Tracking System, who cares, try to hit all the keywords.) As a wordy person with a long career, I needed to get the person-readable version down to a couple of pages. ChatGPT was a huge help there: I could feed it my resume and a JD and say “what can I cut out of here that’s not relevant?” or “help me get to 2,000 words” or “this draft I wrote goes back and forth between present and past tense, can you rewrite this to past tense?”

I’d still want to tweak the text, but there were times where I had re-written something so many times I couldn’t see the errors, and ChatGPT turned out a revision that got me there. And in these cases, I rarely caught an instance of facts being changed.

Interview Prep

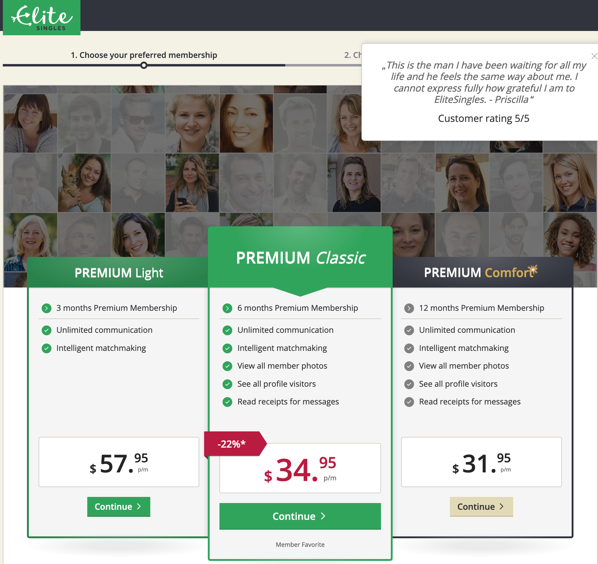

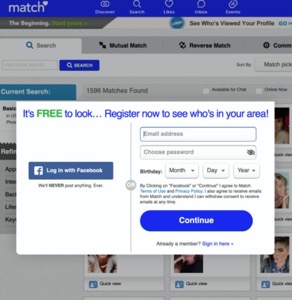

I hadn’t interviewed in years, either, and found trying to get answers off Glassdoor, Indeed, and other sites was a huge hassle, because of forced logins, the web being increasingly unsearchable and unreadable, all that.

So I’d give ChatGPT something along the lines of

Act as a recruiter conducting a screening interview. I’ll paste the job description and my resume in below. Ask me interview questions for this role, and after each answer I give, concisely offer 2-3 strengths and weaknesses of the answer, along with 2-3 suggestions.

This was so helpful. The opportunity to sit and think without wasting anyone’s time was excellent, and the evaluations of the answers were helpful to think about. I did practice runs where I’d answer out loud to get better at answering on my feet, and I’d save good points and examples I’d made to make sure I hit them.

I attempted having ChatGPT drill into answers (adding an instruction such as “…then, ask a follow-up question on a detail”) and I never got these to be worthwhile.

What ChatGPT was useful for

Comparing resumes to a job description and offering analysis

Job descriptions are long, so boring (and shouldn’t be!), often repetitive from section to section, and they’re all structured just differently enough to make the job-search-fatigued reader fall asleep on their keyboard.

I’d paste the JD and the latest copy of my resume in and say “what are the strengths and weaknesses of this resume compared to this job description?” and I’d almost always get back a couple of things on both sides that were worth calling out, and why:

“The job description repeatedly mentions using Tableau for data analysis work, and the resume does not mention familiarity with Tableau in any role.”

“The company’s commitment to environmental causes is a strong emphasis in the About Us and in the job description itself, while the resume does not…”

Most of these were useful for tailoring a resume: they’d flag that the JD called for something I’d done, but hadn’t included on my resume for space reasons since no one else cared.

It was also good at thinking about what interview questions might come, and what I might want to address in a cover letter.

An annoying downside was that it frequently flagged something a human wouldn’t. I hadn’t expected this, given the descriptions of how good LLMs and ChatGPT were at knowing that “managing” and “supervising” were pretty close in meaning. For me, this would be telling me I hadn’t worked in finance technology, even though my last position was at a bank’s technology arm. For a while, I would say “you mentioned this, but this is true” and it would do the classic “I apologize for the confusion…” and offer another point, but it was rarely worth it: if I didn’t get useful points in the first response, I’d move on.

Industry research and comparison work

This varied more than any other answer. Sometimes I would ask about a company I was unfamiliar with and ask for a summary of its history, competitors, and current products, and I’d get something that checked out 100% and was extremely helpful. Other times it was understandably off: so many tech companies have similar names, it’s crazy. And still other times, it was worthless: the information would be wrong but plausible, or haphazard or lazy.

Figuring out if an answer is correct or not requires effort on your part, but usually I could eyeball them and immediately know if it was worth reading.

It felt sometimes like an embarrassed and unprepared student making up an answer after being called on in class: “Uhhhh yeahhhhh, competitors of this fintech startup that do one very specific thing are… Amazon! They do… payments. And take credit cards. And another issssss uhhhhh Square! Or American Express!”

Again, eager-to-please — ChatGPT would give terrible answers rather than no answer.

I don’t know if ChatGPT helped on

Keyword stuffing

Many people during my job search told me this was amazingly important, and I tried this — “rewrite this resume to include relevant keywords from this job description.” It turned out what seemed like a pretty decent, if spammy-reading, resume, and I’d turn it in.

I didn’t see any difference in response rates when I did this, though my control group was using my basic resume and checking for clear gaps I could address (see above), so perhaps that was good enough?

From how people described the importance of keyword stuffing, though, I’d have expected the response rate to go through the roof, and it stayed at basically zero.

Success rates, generally and versus screening AI

I didn’t feel like there was much of a return on any of this. If I hadn’t felt like using ChatGPT for rewrites was improving the quality of my resumes as I saw them, I’d have given up.

One of the reasons people told me to do keyword stuffing (and often, that I should just paste the JD in at the end, in 0-point white letters — this was the #1 piece of advice people would give me when I talked to them about job searching) was that everyone was using AI tools to screen, and if I didn’t have enough keywords, in the right proportion, I’d get booted from jobs.

I didn’t see any difference in submitting to the different ATS systems, and if you read up on what they offer in terms of screening tools, you don’t see the kind of “if <80% keyword match, discard” process happening.

I’d suggest part of this is because using LLMs for this would be crazy prejudicial against historically disadvantaged groups, and anyone who did it would and should be sued into a smoking ruin.

But if someone would do that anyway, from my experience here having ChatGPT point out gaps in my resume where any human would have made the connection, I wouldn’t want to trust it to reject candidates. Maybe you’re willing to take a lot of false negatives if you still get true positives to enter the hiring process, but as a hiring manager, I’m always worried about turning down good people.

There are sites claiming to use AI to compare your resume to job descriptions and measure how they’re going to do against AI screening tools — I signed up for trials and I didn’t find any of them useful.

Things ChatGPT was terrible at

Writing from scratch

If I asked “given this resume and JD, what are key points to address in a cover letter?” I would get a list of things, of which a few were great, and then I’d write a nice letter.

If I asked ChatGPT to write that cover letter, it was the worst. Sometimes it would make things up to address the gaps, or offer meaningless garbage in that eager-to-please voice. The making things up part was bad, but even when it succeeded, I hate ChatGPT’s writing.

This has been covered elsewhere — the tells that give away that it’s AI-written, the overly-wordy style, the strange cadence of it — so I’ll spare you that.

For me, both as a job seeker and as someone who has been a hiring manager for years, it’s that the writing is entirely devoid of personality in addition to being largely devoid of substance. Its letters read like the generic cover letters out of every book and article ever written on cover letters, because that’s where ChatGPT’s pulling from, so as it predicts what comes next, it’s in the deepest of ruts. You can do some playing around with the prompts, but I never managed to get one I thought was worth reading.

What I, on both sides of the process, want is to express personality, and talk about what’s not on the resume. If I look at a resume and think “cool, but why are they applying for this job?” and the cover letter kicks off with “You might wonder why a marine biologist is interested in a career change into product management, and the answer to that starts with an albino tiger shark…” I’m going to read it, every time, and give some real thought to whether they’d be bringing in a new set of tools and experiences.

I want to get a sense of humor, of their writing, of why this person for this job right now.

ChatGPT responses read like “I value your time at the two seconds it took to copy and paste this.”

And yes, cover letters can be a waste of time. Set aside the case where you’re talking about a career jump — I’d rather no cover letter than a generic one. A ChatGPT cover letter, or its human-authored banal equivalent, says the author values the reader’s time not at all, while a good version is a signal that they’re interested enough to invest time to write something half-decent.

Don’t use ChatGPT to write things that you want the other person to care about. If the recipient wants to see you, or even just that you care about the effort of your communication, don’t do it. Do the writing yourself.

For anything where there’s latitude for confabulation

(And there’s always latitude for confabulation)

If you ask ChatGPT to rewrite a resume to better suit a job description, you’ll start to butt up against it writing the resume to match the job description. You have to watch very closely.

I’d catch things like managerial scope creep: if you say you led a team, on a rewrite you might find that you were in charge of things often associated with managing that you did not do. Sometimes it’s innocuous: hey, I did work across the company with stakeholders! And sometimes it’s not: I did not manage pricing and costs across product lines, where did that come from?

The direction was predictable, along the eager-to-please lines — always dragging it towards what it perceived as a closer match, but it often felt like a friend encouraging you to exaggerate on your resume, and sometimes, to lie entirely. I didn’t like it.

When I was doing resume rewriting, I made a point to never use text immediately, when I was in the flow of writing, because I’d often look back at a section of the resume and think “I can’t submit that, that’s not quite true.”

That’s annoying, right? A thing you have to keep an eye on, drag it back towards the light, mindful that you need to not split the difference, to always resist the temptation to let it go.

Creepy. Do not like.

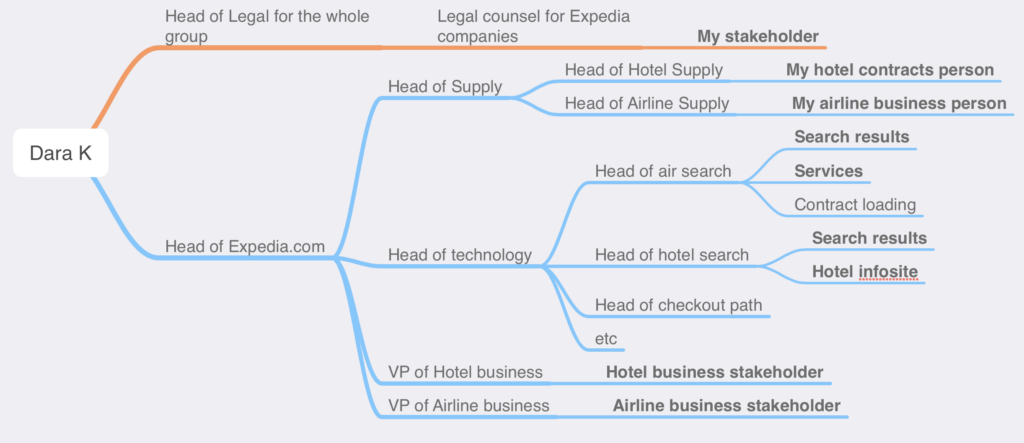

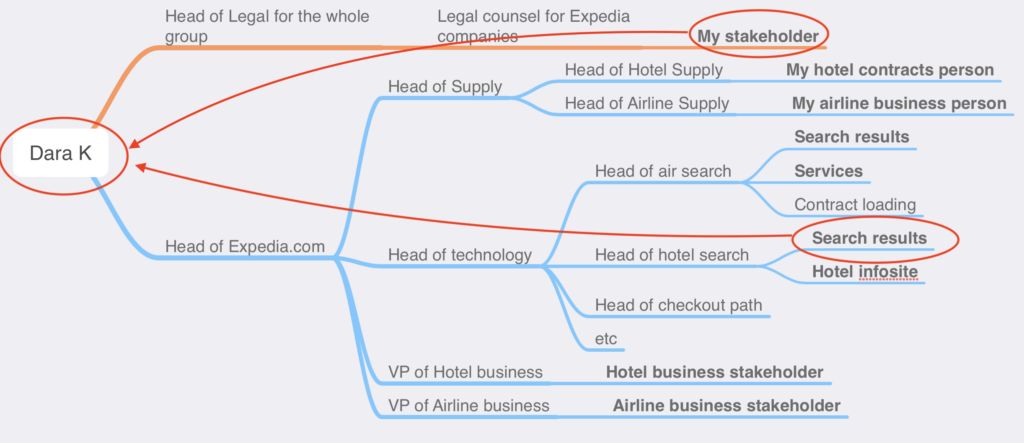

In some circumstances it’s wild, though. I tried to get fancy with it and have it ask standard interview questions and then, based on my resume, answer as best it could. I included an “if there’s no relevant experience, skill, or situation in the resume, please say you don’t know” clarification. It would generally do okay, and then, asked about managing conflicting priorities, it described a high-stakes conflict between the business heads and the technology team where we had to hit a target but also had to do a refactor. ChatGPT entirely made up a whole example situation that followed the STAR (situation, task, action, result) model for answering, with a happy conclusion for everyone involved.

Reminded that that didn’t happen and to pass on questions it didn’t have a good response to, ChatGPT replied “Apologies for the confusion, I misunderstood the instructions…” and then restated the clarification to my satisfaction, and we proceeded. It did the same thing two questions later: a totally made-up generic example of a situation that could have happened at my seniority level.

If I’d just been pasting in answers to screener questions, I’d have claimed credit for results never achieved, and been the hero in crises that never occurred. And if I’d been asked about them, they’re generic enough someone could have lied their way through it for a while.

No one wants to be caught staring at their interviewer when asked “this situation with the dinosaur attack on your data center is fascinating, can you tell me more about how you quarterbacked your resiliency efforts?”

My advice here — don’t use it in situations like this. Behavioral questions proved particularly prone, but any time there was a goal like “create an answer that will please the question-asker” strange behavior started coming out of the woodwork. It’s eager to please, it wants to get that job so so badly!

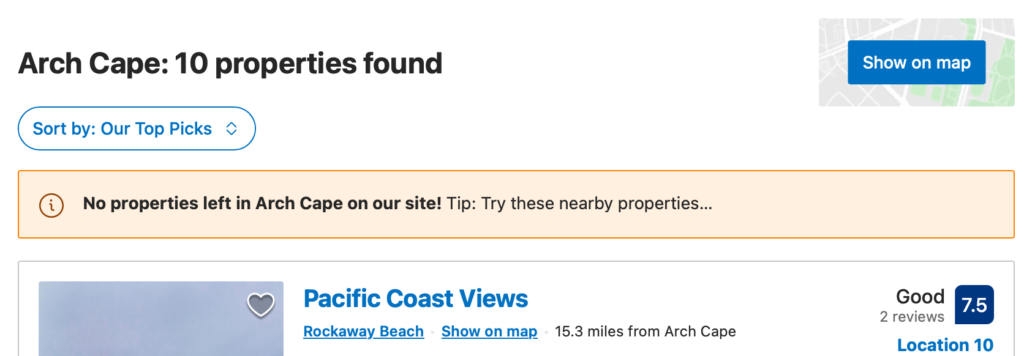

Finding jobs and job resources

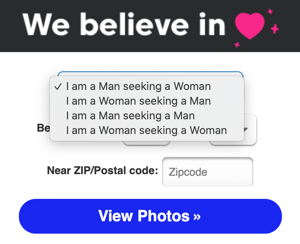

Every time I tried looking for resources specific to Product Management jobs, the results were garbage: “Try Indeed!” I’d regenerate and get “Try Glassdoor and other sites…” In writing this I went back to try again, and it’s now only almost all garbage:

LinkedIn: This platform is not only a networking site but also a rich resource for job listings, including those in product management. You can find jobs by searching for “product management” and then filtering by location, company, and experience level. LinkedIn also allows you to network with other professionals in the field and join product management groups for insights and job postings.

But… regenerating the response, amongst the general-purpose junk, I got it to mention Mind the Product, a conference series with a job board, after it went through the standard list of things you already know about. Progress?

I got similarly useless results when I was looking for jobs in particular fields, like climate change or at B-corps (“go find a list of B-corporations!”). It felt frustratingly like it wasn’t even trying, which… you have to try not to anthropomorphize the tool, it’s not helpful.

It is, though, another example of how ChatGPT really wants to please: it does not like saying “I don’t know” and would rather say “searching the web will turn up things, have you tried that?”

What I’d recommend

Use the LLM of your choice for:

- Interview preparation, generally and for specific jobs

- Suggestions for tailoring your resume

- Help editing your resume

And keep an eye on it. Again, imagine you’ve been handed the response by someone with a huge grin, wide eyes with massively dilated pupils, an expectant expression, and who is sweating excessively for no discernible reason.

I got a lot out of it. I didn’t spend much time in GPT 3.5, but it seemed good enough for those tasks compared to GPT 4. When I tried some of the other LLM-based tools, they seemed much worse. My search started May 2023, though, so obviously, things have already changed substantially.

And hey, if there are better ways to utilize these tools, let me know.