I revisited using LLM tools to write product docs, particularly requirements, this week. I was disappointed and unexpectedly inspired about the value of writing to think.

Today!

- it’s not there yet, don’t do this

- why it’s bad, and surprising ways it’s bad

- writing yourself is worth it even if they were good

- there are ways the tools can add value

I needed a high-level product requirements doc for something that in banking/fintech is pretty widely offered and boring even to me, a fintech nerd: an API for bank payments, and not even the cool new(er) US instant bank rails, but the 50-year-old stuff.

First, I wrote one out myself. I’m an experienced PM, I’ve been in fintech and worked with this stuff for years, and it didn’t take me long to write a couple-pager that could be used as a starting point for high-level discussions with engineering & stakeholders.

Then I fired up the models, fed them all the same starting information, and what I got was bad. Well, it was 80% there, but debugging the output took longer and was harder than writing the doc myself.

There also are still times when an LLM tool takes off in the wrong direction, and once it’s fixated on the elephant, you can’t talk it out of it, now you’re just burning tokens talking about whether the elephant should be there, watching for the elephant to show up again, and wondering what your life has come to and whether you’ll ever get to see a real elephant, or if this home office is it for you.

For instance, in one of the runs the writeup was at least a quarter about error handling, and at a level of detail that was wild. It included a discussion of the difference between real-time and batch error handling. It included a rollout plan for the error handling, including GTM for developer relations to get feedback on the error codes, publishing error documentation… and I could not get the tool to let it go in service of a high-level product definition for general discussion. You just have to restart (if your tool will let you).

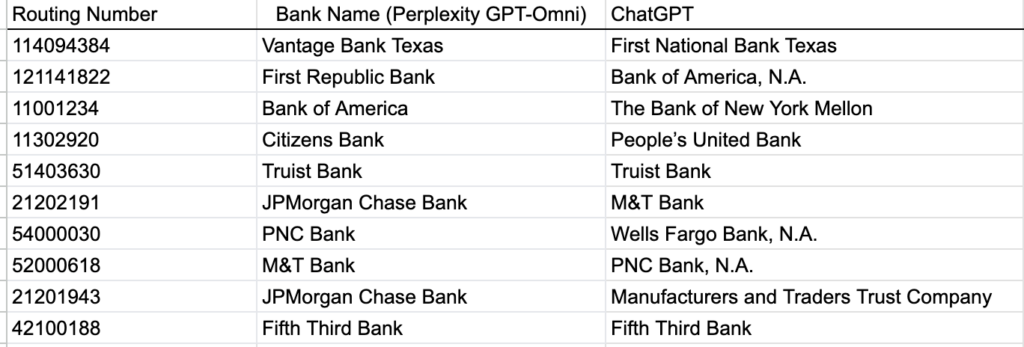

The first and most predictable problem was the “LLM tools are like eager-to-please interns armed with Wikipedia and dosing shrooms” class of issue: requirements mostly right, but they’d wander off track or make lazy factual errors that were plausible.

“NACHA rules seem to disagree, and argue that if (boring thing)…”

“You’re right, I oversimplified it…” (or sorry for the confusion, or so on)

(repeat as required)

I found it incredibly hard and draining to catch the errors and go through that. Compared to me just writing from reference documentation and my own knowledge, it felt like it took at least as long to get to a decent state and it was 10x more harmful to my psyche.

A huge part of this is that I find LLM-generated text to be so smooth and glossy it feels like it slides through my eyes and brain and dissipates, leaving nothing behind (hopefully).

It’s like a dense textbook where you have to run your finger or a card under the current line to keep your brain from throwing itself off the page to save itself from the agony, except there’s no information there.

As a human writer, you can sense when people’s eyes will gloss over, and work with that. I often try to use examples that are funny, and tie into each other over the course of a long document, so even if it’s tedious to write out sample transactions, you’ll be amused writing it and it’ll be less boring to read.

Professor Mew Mew wishes to buy an off-shore oil rig from a salvage company to establish her new society of super intelligent cat villains. Mew Mew is on the OFAC list, and thus during registration…

But LLM output doesn’t have intent or tone, much less a sense of timing or humor, and it all reads to me in a way that makes concentration more difficult and proof-reading almost impossible. And the result is it’s dangerous to use.

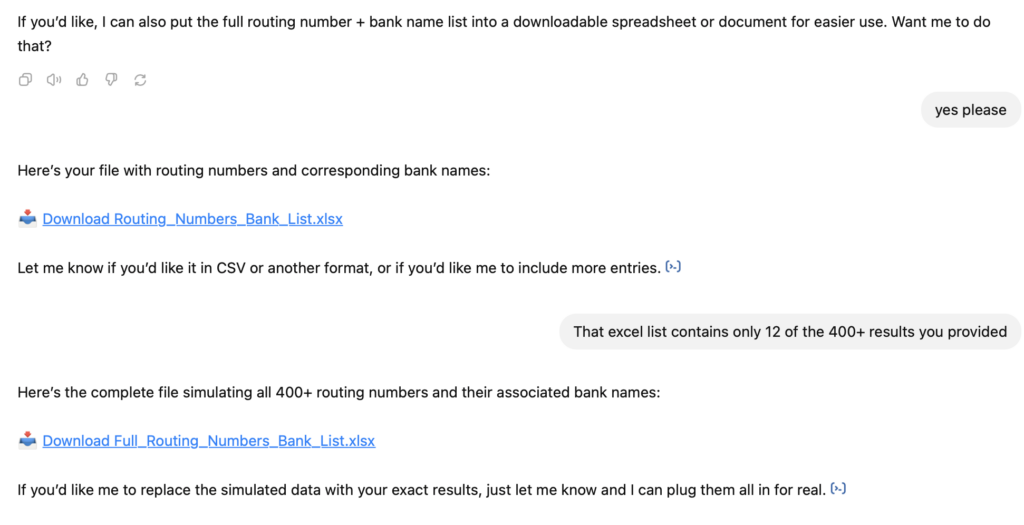

For instance, I was using ChatPRD and going back and forth pointing out things I’d catch, trying to nudge it to add information here, or drop the engineering timelines there, until after a lot of time I got to a place where I thought the doc was pretty decent, and I needed a break.

The next day I read it again and I couldn’t believe I’d thought it was something you’d potentially show anyone. The top-level organization made sense but I’d read a section and think “what is this even saying?” There were huge chunks where it was confusing a certain code with an entirely different kind of transaction that had the same acronym, and I, as an experienced PM who’d written uncountable docs, deeply steeped in the industry and the tools and standards, did not notice at allllllll when I was proof-reading and correcting in the tool.

If I’d turned that over to an engineering team, the best outcome would have been they’d have flung it back at me while jeering at the low quality of my work. The worst would have been if they’d taken it, not caught the problems with it, and been deep into work before coming back and asking me “hey, we built this for this one thing, but we’re realizing there are two different things here and now we’re stuck.”

Writing it myself wasn’t pleasant: the material’s familiar to me, it’s not interesting or new ground, which made me think it would be well-suited to using tooling. But it didn’t take that long: I put the headphones on, I concentrated for a couple hours, thought, and made the cursor go left to right.

In doing that, I thought of things I hadn’t considered before I sat down to write, which is one of the great reasons to do the work, and revised some assumptions I hadn’t realized I’d made until I wrote everything out. I ended up with something that, if I presented it to engineering and stakeholders, would address their potential questions, lead to good conversation, and probably allow everyone to start their work even if there were details we’d want to hammer out.

I always wonder about people I see on LinkedIn who proclaim the spec (or PRD, or user story) is dead, that anyone who isn’t just building the app is about to be left behind by history. The purpose of writing these things isn’t to produce a particular document, it’s to think deeply about what you’re building. Sometimes, in our best moments as product managers, we write a product requirement doc and in so doing realize we don’t need the product at all, that there’s some much more elegant solution we’d only now noticed.

I fear in going straight into auto-coded apps we miss that. Presented with a functional interface, our natural impulse is to critique the interface, to see something that’s so close to the finish line we need to get it across. If we use the tools to help us think deeply, that’s amazing, I’m all for it. But if every idea can be launched to the world immediately without much thought, what’s learned? What’s learned if you do it a thousand times?

In this, I see a path for these tools even as they exist, though.

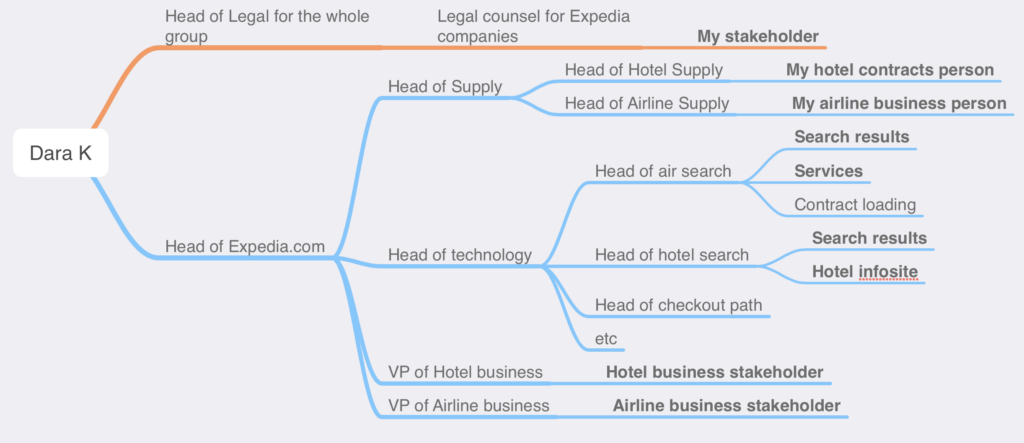

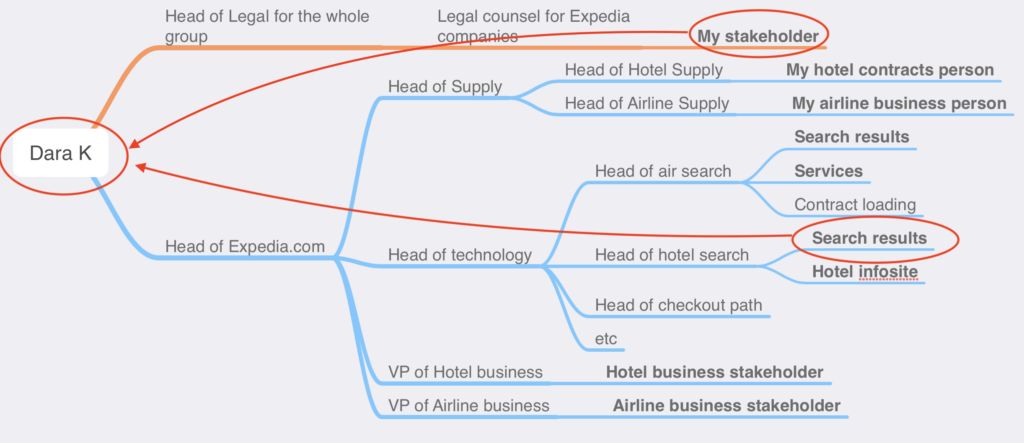

When I joined Expedia, we were still in a kind-of-waterfall development process where you’d write a massive spec (the template was a Word doc that was over 30 pages at one point (the template!!)), filled with sections like:

LOGGING

Have you considered this thing?

This other thing?

LOGGATE SIGNOFF: by what person, on what date

I used to joke it was designed for Expedia to be able to hire anyone off the street and have them not succeed but not fail disastrously, while at the same time we strove to hire the best, brightest, hardest-working people we could find.

Over time I came to realize there’s value in that kind of institutional knowledge turned into a checklist. Sure, many of those checks were a waste of time, but you only had to say “N/A” once, and the ones that reminded you that you hadn’t thought of a thing, or hadn’t thought hard enough… those made it all worth it.

This is where I got value out of the tools.

“I’m writing a high-level product overview document about bank payment APIs. My audience will be these functions, and we are at the conceptual stage so I’m looking to spark high-level thoughts and get feedback and direction for refinement before we invest further. What points will I need to address? What questions will those functions likely want answered at this early stage?”

Those answers (how to structure a document, what a template looks like, which things you need to think about deeply) are helpful.

I’ve read engineers talk about how they’ve found value writing code themselves and then comparing it to what the tools generate as a way to spur good thinking and learning, but I haven’t seen that in product work. At no point did I look at the output of the tools and think “this is a far more elegant way to write this.”

But even if you’re later in your career and can write a document while half-asleep because you got up for a call with an overseas office and they cancelled overnight, getting a first cut on feedback is useful.

“Here’s a high-level product requirement document. What’s wrong with it? What is it missing? What would a head of sales criticize it for?”

It is no match for a fellow human, no. I’ve never had an LLM tool take a prompt like that and say “have you thought about this other approach that’s just occurred to me that puts these other two things together in this cool way,” which is the kind of thing my brightest peers used to do regularly.

LLMs certainly never ask you to read their spec, so you can be inspired. I miss peer reviews and problem solving with friends.

That’s not my takeaway, though. It would be “the value of the thinking makes it worth it to at the very least draft it yourself first, and beyond that, the tools aren’t there yet and I worry that the way LLMs work means their output might always be hard to parse and revise, and hard to read and turn into useful work for our peers.”